Querying 10GB of data with PostgreSQL, pgAdmin and Docker Compose

Let’s imagine you have a dataset in CSV format you would like to run some SQL queries against.

The dataset is small enough to store locally on your machine, but large enough to make importing it into a database non trivial.

In this post, we will take a ~10GB CSV file and setup a local environment that lets us query it using SQL.

Approach

As always, there are a myriad of different ways to achieve our objective.

Our plan is:

- Download the CSV file

- Install and run PostgreSQL

- Install and run pgAdmin

- Create a table with the appropriate columns using pgAdmin

- Import the CSV into the table using pgAdmin

- Query the table using pgAdmin

- Do the above using Docker and Docker Compose

Get the data

Our dataset is the Seattle Public Library’s collection inventory, courtesy of City of Seattle Open Data and Kaggle:

- Login to Kaggle

- Navigate to the dataset via the link

- Download the zip

- Unzip the folder

- Should see an 11.8GB

library-collection-inventory.csv,

BibNum,Title,Author,...

3011076,"A tale of two friends / adapted by Ellie O'Ryan ; illustrated by Tom Caulfield, Frederick Gardner, Megan Petasky, and Allen Tam.","O'Ryan, Ellie",...

Import the data using Docker

In this section, we’ll adapt accordingly this guide describing how to setup locally PostgreSQL with Docker.

Pull the images

- Download the PostgreSQL image

docker pull postgres - Check the image is available

docker images | grep postgres - Download the pgAdmin image

docker pull dpage/pgadmin4 - Check the image is available

docker images | grep pgadmin

Start the containers

PostgreSQL

docker run \

--mount 'type=volume,source=seattle-dev-vol,target=/var/lib/postgresql/data' \

--detach \

--name seattle-dev-db \

--rm \

--env POSTGRES_PASSWORD=mypostgrespw \

--publish 5432:5432 \

postgres

Check the container is running docker ps.

Check a local volume seattle-dev-vol has been created docker volume ls.

This volume is mounted in the container at /var/lib/postgresql/data.

We could have done a bind instead of a volume mount, but volume mounts are the preferred mechanism for persisting data generated by and used by Docker containers.

pgAdmin

docker run \

--name seattle-dev-db-admin \

--publish 80:80 \

--env '[email protected]' \

--env 'PGADMIN_DEFAULT_PASSWORD=mypgadminpw' \

--rm \

--detach \

--mount 'type=bind,source=/path/to/csv/dir,target=/var/lib/pgadmin4/storage/mypgadmin_email.com' \

dpage/pgadmin4

Check the container is running docker ps.

pgAdmin lets you upload files but ours is too large (we can increase the maximum file size setting, but the request times out).

To make our CSV available to pgAdmin, we use a bind mount which is preferred to a volume mount because we only need to do the mount once (the first time we run the container).

The correct container path is actually

/var/lib/pgadmin/storage/mypgadmin_email.com but we don’t have permissions to

write there (we’ll take care of this later).

Test the containers

PostgreSQL

- Open a Bourne shell inside the container

docker exec --interactive --tty seattle-dev-db sh - Open a PostgreSQL shell

psql --username postgres - Display databases

\l - Display current database

\c - Display tables

\dt - Exit the PostgreSQL shell

exit - Exit the Bourne shell

exit

pgAdmin

- Navigate to

http://localhostin a browser - Login using email

[email protected]and passwordmypgadminpw

Create a table

- Get the internal IP address

<internal-ip>of the database,docker inspect seattle-dev-db -f "{{json .NetworkSettings.Networks }}" | grep IPAddress - Navigate to

http://localhostin a browser - Open the create server dialogue by clicking on servers in the sidebar

- Enter

myserverfor the server name, the host as<internal-ip>, usernamepostgres, passwordmypostgrespw - In the sidebar, Servers -> myserver -> Databases -> postgres -> Schemas -> public -> Tables -> Create -> Table to open the create table dialogue

- Create a table

bookwith the columns below

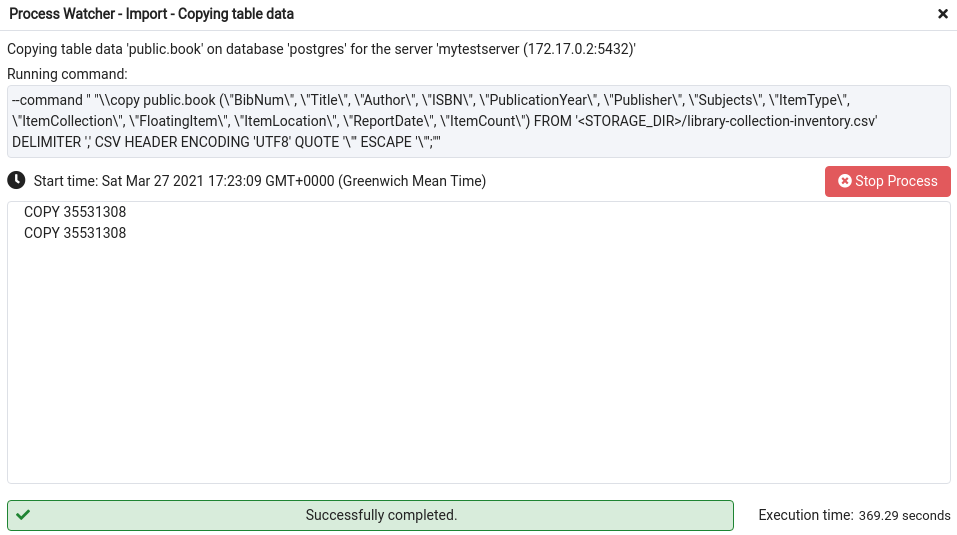

Populate the table

docker exec --interactive --tty seattle-dev-db-admin shcp /var/lib/pgadmin4/storage/mypgadmin_email.com/library-collection-inventory.csv /var/lib/pgadmin/storage/mypgadmin_email.com/library-collection-inventory.csv(takes a few moments)- From

bookin the sidebar, open the Import/Export dialogue:- Filename - select the CSV via the file browser

- Format

csv - Encoding

utf-8 - Header

Yes - Delimiter

, - Quote

" - Escape

"

After a few moments (minutes!):

Query the data using Docker Compose

Create a directory with one file docker-compose.yml

version: "3.9"

services:

db:

image: postgres

volumes:

- seattle-dev-vol:/var/lib/postgresql/data

environment:

- POSTGRES_PASSWORD=mypostgrespw

ports:

- "5432:5432"

db-admin:

image: dpage/pgadmin4

environment:

- [email protected]

- PGADMIN_DEFAULT_PASSWORD=mypgadminpw

ports:

- "80:80"

depends_on:

- db

volumes:

seattle-dev-vol:

external: true

Start the services docker-compose up.

Navigate to http://localhost; if you get CSRF errors, make sure all previous

tabs of pgAdmin have been closed.

If you need to recreate a database server in pgAdmin, you can set the host to be

the Docker Compose service name db rather than the internal IP address like

before.

In pgAdmin, you can run queries via the query editor, e.g.

SELECT "Title" FROM book LIMIT 3;

You can also run queries by opening a Bourne shell in the database container

docker-compose exec db sh then a Postgres shell psql --username postgres.

To stop the containers, docker compose stop.

To stop the containers and remove them, docker compose down.

If you run docker compose down then docker-compose up, you will have to

recreate the database server in pgAdmin.

Cleanup

- Delete the CSV

library-collection-inventory.csv - Delete the Docker volume

docker volume rm seattle-dev-vol - Stop and remove the containers

docker-compose down - Remove the images

docker image rm postgres dpage/pgadmin4